Duke University AI Uncovers Simple Rules in Complex Systems

Researchers at Duke University have developed a novel artificial intelligence framework capable of extracting simple, interpretable mathematical rules from highly complex, dynamic systems. This AI analyzes time-series data to reduce thousands of interacting variables into compact, linear equations that accurately model real-world behavior. The method, applicable across physics, engineering, climate science, and biology, offers a powerful new tool for scientific discovery, particularly in domains where traditional equations are unknown or too cumbersome to derive. It represents a significant step toward creating 'machine scientists' that can assist human researchers in understanding the fundamental principles governing natural and technological phenomena.

In the quest to understand the natural world, scientists have long sought to distill complexity into simplicity. From Newton's laws of motion to Einstein's theory of relativity, the greatest breakthroughs often involve finding elegant rules behind seemingly chaotic phenomena. Today, a new frontier is being explored at the intersection of artificial intelligence and dynamical systems. Researchers at Duke University have created an AI framework designed to do precisely this: uncover clear, readable mathematical rules hidden within some of the world's most complex systems. This innovation promises to extend human scientific reasoning into domains where traditional physics-based modeling falls short.

The Core Innovation: From Chaos to Clarity

The fundamental challenge the Duke framework addresses is the 'curse of dimensionality' in nonlinear dynamical systems. Many real-world systems, from global climate patterns to neural circuits in the brain, involve hundreds or thousands of variables interacting in intricate ways. While we can collect vast amounts of data on how these systems evolve over time, translating that data into a comprehensible model has been a monumental task. The new AI framework, detailed in a study published in npj Complexity, provides a methodological bridge. It takes time-series experimental data and applies a combination of deep learning and physics-inspired constraints to identify the most meaningful patterns of change.

The outcome is a dramatically simplified model. The AI reduces the system to a much smaller set of hidden variables that still capture its essential behavior, producing a compact, linear mathematical representation. According to the research, these resulting models can be more than ten times smaller than those generated by earlier machine-learning methods while maintaining reliable accuracy for long-term predictions. This compression is not just a technical achievement; it is the key to interpretability. A compact linear model allows scientists to connect the AI's findings back to established theoretical frameworks and human intuition.
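
To make the idea of a compact linear model concrete, here is a minimal sketch in Python. It is not the Duke framework, which relies on deep learning. It swaps in a much simpler stand-in: a truncated SVD to find a few hidden variables, followed by a least-squares fit of a linear update rule. The synthetic data, the 50 "sensors," the matrices `A_true` and `C`, and the helper names are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical ground truth: a 2-variable damped rotation observed through 50 sensors.
theta, decay = 0.1, 0.99
A_true = decay * np.array([[np.cos(theta), -np.sin(theta)],
                           [np.sin(theta),  np.cos(theta)]])
C = rng.standard_normal((50, 2))  # maps the 2 hidden variables to 50 measurements

steps = 200
z = np.zeros((steps, 2))
z[0] = [1.0, 0.0]
for k in range(steps - 1):
    z[k + 1] = A_true @ z[k]
X = z @ C.T  # time series of shape (steps, 50)


def fit_reduced_linear_model(X, rank):
    """Find `rank` hidden variables via SVD, then fit h[k+1] = A_r @ h[k]."""
    U, _, _ = np.linalg.svd(X.T, full_matrices=False)
    basis = U[:, :rank]              # (n_sensors, rank)
    H = X @ basis                    # hidden-variable time series, (steps, rank)
    # Least squares for W in H[:-1] @ W = H[1:], so that h[k+1] = W.T @ h[k].
    W, *_ = np.linalg.lstsq(H[:-1], H[1:], rcond=None)
    return basis, W.T


def rollout(basis, A_r, x0, steps):
    """Predict the full measurement vector forward using only the reduced model."""
    h = basis.T @ x0
    out = [basis @ h]
    for _ in range(steps - 1):
        h = A_r @ h
        out.append(basis @ h)
    return np.array(out)


basis, A_r = fit_reduced_linear_model(X, rank=2)
X_pred = rollout(basis, A_r, X[0], steps)
print("reduced model size:", A_r.shape)
print("max prediction error:", np.max(np.abs(X_pred - X)))
```

In this toy case the data really are generated by two hidden variables, so a 2-by-2 matrix reproduces all 50 measurements. Real systems are nonlinear and noisy, which is where a learned, nonlinear choice of hidden variables, as described in the study, would be needed.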

Mathematical Foundation and Practical Application

The AI's approach builds on a decades-old idea from mathematician Bernard Koopman, who showed in the 1930s that a complex nonlinear system can, in theory, be represented by a linear model acting on a suitably chosen set of measurement functions of its state. The practical hurdle has always been that such representations often require an impractically large number of equations. The Duke AI overcomes this by intelligently selecting the most informative dimensions from the data. As lead researcher Boyuan Chen explains, scientific discovery depends on finding simplified representations of complicated processes. This AI provides the tool to turn overwhelming data into the kind of simplified rules scientists rely on.
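
A small worked example shows what Koopman's idea looks like in practice. The sketch below uses a standard textbook-style toy system, not one from the Duke study: a two-variable nonlinear map that becomes exactly linear once a third coordinate, the square of the first variable, is added. The constants `A` and `B` and all function names are assumptions chosen for illustration.

```python
import numpy as np

A, B = 0.9, 0.5  # hypothetical constants for the toy system


def nonlinear_step(x):
    """One step of a nonlinear map: x2 depends on the square of x1."""
    x1, x2 = x
    return np.array([A * x1, B * x2 + (A**2 - B) * x1**2])


def lift(x):
    """Augment the state with x1**2, the extra coordinate that linearizes the map."""
    x1, x2 = x
    return np.array([x1, x2, x1**2])


# In the lifted coordinates (x1, x2, x1**2) the same dynamics are a matrix multiply.
K = np.array([[A,   0.0, 0.0],
              [0.0, B,   A**2 - B],
              [0.0, 0.0, A**2]])


def simulate_nonlinear(x0, steps):
    x = np.array(x0, dtype=float)
    for _ in range(steps):
        x = nonlinear_step(x)
    return x


def simulate_lifted(x0, steps):
    y = lift(np.array(x0, dtype=float))
    for _ in range(steps):
        y = K @ y
    return y[:2]  # read the original variables back out


x0 = [1.5, -0.7]
print(simulate_nonlinear(x0, 30))
print(simulate_lifted(x0, 30))
```

Here three linear equations are enough. For most real systems no small set of extra coordinates is known in advance, and a naive choice can require a very large number of them, which is the hurdle the paragraph above describes.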

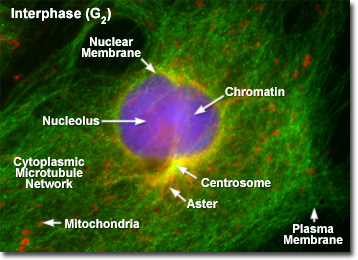

The framework's utility has been demonstrated across a diverse range of test cases. It successfully modeled the nonlinear swing of a pendulum, the behavior of electrical circuits, climate models, and biological neural signals. In each case, the AI identified a small number of governing variables. Beyond mere prediction, the system can pinpoint stable states, or 'attractors,' where a system naturally settles. Recognizing these landmarks is crucial for diagnosing whether a system is operating normally or veering toward instability, a capability with implications for maintaining complex infrastructure, ecosystems, or financial markets.
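
One reason a compact linear model helps with this kind of diagnosis is that its resting state and stability can be read off with basic linear algebra. The sketch below is a textbook stability check, not the procedure used in the study. It assumes a hypothetical reduced model of the form h[k+1] = A h[k] + b, with made-up values for `A` and `b`.

```python
import numpy as np


def fixed_point_and_stability(A, b):
    """For h[k+1] = A @ h[k] + b, return the fixed point and whether it attracts."""
    n = A.shape[0]
    h_star = np.linalg.solve(np.eye(n) - A, b)   # solves h = A @ h + b
    spectral_radius = np.max(np.abs(np.linalg.eigvals(A)))
    return h_star, spectral_radius, bool(spectral_radius < 1.0)


# Hypothetical 2-variable reduced model (values chosen for illustration only).
A = np.array([[0.9, -0.2],
              [0.2,  0.9]])
b = np.array([0.5, -0.1])

h_star, rho, stable = fixed_point_and_stability(A, b)
print("fixed point:", h_star)
print("spectral radius:", rho, "stable:", stable)

# Cross-check by simulation: start far away and see where the system settles.
h = np.array([5.0, -3.0])
for _ in range(500):
    h = A @ h + b
print("state after 500 steps:", h)
```

A spectral radius below 1 means every trajectory of this linear model spirals in to the fixed point. A value above 1 would signal that small disturbances grow, which is the kind of warning sign the paragraph above refers to.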

Implications for the Future of Scientific Discovery

The development signals a shift in the role of AI from a pattern-recognition tool to a potential partner in discovery. The researchers are careful to frame it not as a replacement for physics, but as an extension of human capability. The method is especially valuable when traditional equations are unavailable, incomplete, or too complex to derive manually. It allows reasoning with data in the absence of a complete theoretical map.

Looking ahead, the Duke team envisions this work contributing to the development of 'machine scientists.' Future directions include using the AI to guide experimental design by actively selecting which data to collect to most efficiently reveal a system's structure. The researchers also plan to apply the method to richer data types like video and audio. This progression points toward a future where AI assists in uncovering the fundamental, often simple, rules that shape both the physical world and living systems, turning vast oceans of data into navigable streams of understanding.