The AI Scaling Cliff: Why Massive Infrastructure Investments May Not Pay Off

Recent MIT research suggests that the AI industry's massive infrastructure investments may be heading toward diminishing returns. As algorithms approach their scaling limits, the performance gains from ever-larger models could narrow significantly over the next decade. This challenges the fundamental assumption behind hundred-billion-dollar compute deals signed by major tech companies, raising questions about whether efficiency improvements in smaller models might ultimately prove more valuable than brute-force scaling.

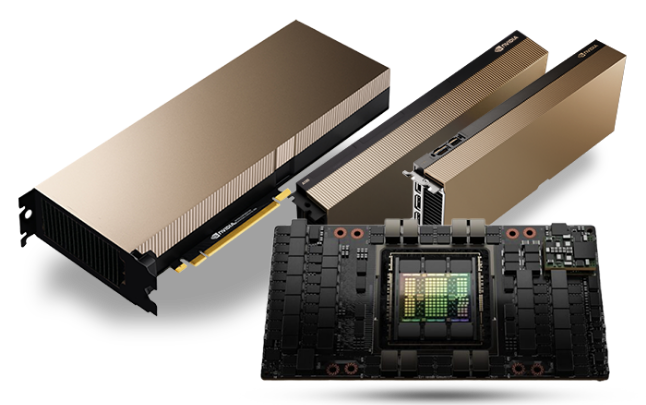

The artificial intelligence industry is experiencing an unprecedented infrastructure boom, with companies like OpenAI signing hundred-billion-dollar deals to build massive computing capacity. These investments are predicated on a core assumption: that AI algorithms will continue to improve predictably as computational scale increases. However, emerging research from MIT suggests this assumption may be fundamentally flawed, potentially leading the industry toward what researchers call a "scaling cliff."

The Scaling Limits Hypothesis

According to research from MIT, the biggest and most computationally intensive AI models may soon offer diminishing returns compared to smaller, more efficient alternatives. The study maps scaling laws against continued improvements in model efficiency, revealing a concerning trend: it could become increasingly difficult to extract significant performance improvements from giant models, while efficiency gains could make models running on more modest hardware increasingly capable over the next decade.
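The narrowing described above can be illustrated with a toy calculation. The sketch below is not the MIT study's model: it assumes a simple power-law scaling curve (loss proportional to compute raised to a small negative exponent) and an assumed rate of algorithmic efficiency gains that multiplies everyone's effective compute. The exponent `alpha`, the one-year efficiency doubling time, and the two compute budgets are hypothetical values chosen only for illustration.

```python
def loss(compute, a=1.0, alpha=0.05):
    """Toy power-law scaling curve: loss falls as compute ** -alpha.

    `a` and `alpha` are illustrative assumptions, not fitted values.
    """
    return a * compute ** (-alpha)


def effective_compute(hardware_compute, years, efficiency_doubling_years=1.0):
    """Hardware compute scaled by an assumed algorithmic-efficiency gain.

    Assumes efficiency doubles every `efficiency_doubling_years` years
    for every model, large or small.
    """
    return hardware_compute * 2 ** (years / efficiency_doubling_years)


def loss_gap(big, small, years):
    """Absolute loss advantage of the big-budget model after `years`."""
    small_loss = loss(effective_compute(small, years))
    big_loss = loss(effective_compute(big, years))
    return small_loss - big_loss


if __name__ == "__main__":
    big_budget, small_budget = 1e6, 1e3  # hypothetical compute units

    # Diminishing returns: each further 10x of compute buys a smaller gain.
    for c in (1e3, 1e4, 1e5):
        print(f"10x from {c:.0e}: loss drops by {loss(c) - loss(10 * c):.4f}")

    # Narrowing gap: shared efficiency gains shrink the big model's lead.
    for years in (0, 5, 10):
        gap = loss_gap(big_budget, small_budget, years)
        print(f"year {years}: big-model advantage = {gap:.4f}")
```

Under these assumptions the large model always keeps some lead, but the absolute size of that lead shrinks each year, which is one simple way to read the claim that the advantage of giant models could narrow over time.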

Neil Thompson, a computer scientist and professor at MIT involved in the study, explains the implications: "In the next five to 10 years, things are very likely to start narrowing." This prediction challenges the current industry trajectory, where companies are investing unprecedented sums in computational infrastructure based on the assumption that bigger models will continue to deliver breakthrough capabilities.

The Efficiency Alternative

Recent developments in AI efficiency provide compelling evidence that the scaling-first approach may not be optimal. The emergence of DeepSeek's remarkably low-cost model in January 2025 served as a reality check for an industry accustomed to burning massive amounts of compute. Efficiency breakthroughs like this demonstrate that significant capabilities can be achieved without the astronomical sums currently being spent on compute.

Thompson emphasizes the importance of balancing scale with algorithmic refinement: "If you are spending a lot of money training these models, then you should absolutely be spending some of it trying to develop more efficient algorithms, because that can matter hugely." This balanced approach could yield better returns than the current singular focus on scaling.

Industry Implications and Warnings

The timing of this research is particularly relevant given the current AI infrastructure boom. OpenAI and other US tech firms have committed to massive infrastructure deals, with OpenAI's president Greg Brockman recently proclaiming that "the world needs much more compute" while announcing a partnership with Broadcom for custom AI chips.

However, skepticism is growing among financial experts. Jamie Dimon, CEO of JPMorgan Chase, recently warned that "the level of uncertainty should be higher in most people's minds" regarding these massive AI investments. The concern is amplified by the rapid depreciation of GPUs, which account for roughly 60 percent of data center construction costs.

Strategic Considerations

The MIT research suggests that companies heavily invested in scaling may face strategic disadvantages as efficiency improvements accelerate. Hans Gundlach, the MIT research scientist who led the analysis, notes that the predicted trend is especially pronounced for reasoning models currently in vogue, which rely more heavily on extra computation during inference.

This raises important questions about innovation strategy. By concentrating resources on scaling existing approaches, companies might miss opportunities emerging from academic research exploring alternatives to deep learning, novel chip designs, and even quantum computing approaches. Today's AI breakthroughs grew out of once-fringe research areas like these, and the same kinds of areas could yield the next generation of AI capabilities.

As the industry stands at this potential inflection point, the wisdom of massive infrastructure investments becomes increasingly questionable. The research suggests that a more balanced approach, combining reasonable scaling with significant investment in algorithmic efficiency, might prove more sustainable and productive in the long term.