The Enshittification Threat: How AI Could Follow Tech Platforms' Downward Spiral

Cory Doctorow's theory of 'enshittification' describes how tech platforms initially serve users well but gradually degrade quality to maximize profits. As artificial intelligence systems become more powerful and embedded in daily life, they face similar risks of value erosion. This article explores how AI's current 'good to users' phase could devolve into biased recommendations, hidden advertising, and degraded performance as companies seek returns on massive investments.

The rapid advancement of artificial intelligence has brought unprecedented capabilities to our fingertips, from personalized restaurant recommendations to complex decision-making support. However, this technological progress comes with a familiar danger: the potential for what writer and tech critic Cory Doctorow terms "enshittification." This concept describes the predictable lifecycle where tech platforms start by serving users well, then gradually degrade quality to extract maximum value for themselves.

Doctorow's framework, whose name reached a mainstream audience when the American Dialect Society chose "enshittification" as its 2023 Word of the Year, explains how companies like Google, Amazon, and Facebook initially compete by providing excellent user experiences. Once they establish market dominance, these platforms systematically reduce value for users while increasing profits through advertising, fee changes, and other extractive practices.

The AI Enshittification Risk

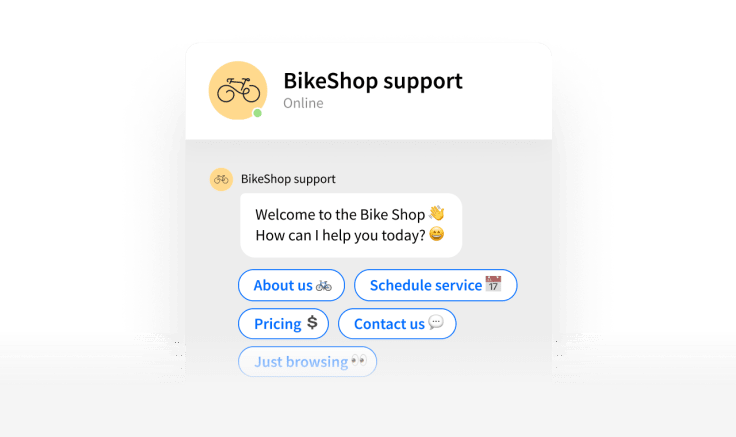

Artificial intelligence systems currently operate in what Doctorow identifies as the "good to users" phase. Companies like OpenAI are investing heavily to build trust and demonstrate value through reliable restaurant recommendations and helpful assistance with daily tasks. However, the massive capital investments required for AI development, potentially hundreds of billions of dollars, create tremendous pressure to generate returns.

The fundamental economics of AI create conditions ripe for enshittification. Because developing large language models is enormously expensive, only a few companies are likely to dominate the field, and those firms will eventually be tempted to abuse their market position. As Doctorow notes in his writings, companies that are able to degrade their offerings face "the perennial temptation to enshittify their products."

Potential Pathways to AI Degradation

The most obvious enshittification risk for AI involves advertising integration. While companies currently promise unbiased recommendations, the financial incentives to incorporate sponsored content are substantial. OpenAI CEO Sam Altman has already hinted at exploring "some cool ad product" that could represent a "net win to the user," while partnerships like OpenAI's deal with Walmart for in-app shopping suggest commercial pressures are mounting.

Beyond advertising, AI companies could employ other enshittification tactics familiar from existing tech platforms. These might include introducing tiered pricing that locks essential features behind higher paywalls, changing privacy policies to use user data for training without consent, or gradually reducing the quality of free services to push users toward premium offerings. Streaming provides a clear template for how AI services could evolve: Amazon Prime Video introduced ads to a service that was originally ad-free.

The Black Box Problem

AI systems face an additional enshittification risk due to their inherent complexity. The "black box" nature of large language models makes it difficult for users to detect when quality degradation occurs. As Doctorow explains, AI companies "have an ability to disguise their enshittifying in a way that would allow them to get away with an awful lot." This opacity means users might not immediately notice when recommendations become biased toward paying partners or when responses are subtly engineered to serve corporate interests rather than user needs.

The consequences of AI enshittification could be more severe than with previous technologies because people increasingly rely on AI for important decisions—from medical advice to financial planning to relationship guidance. When search engines become less useful or social media feeds prioritize engagement over quality, the impact is significant. When AI assistants provide biased guidance on life-changing matters, the stakes are substantially higher.

Resisting the Inevitable

While the economic pressures pushing toward AI enshittification are powerful, the outcome is not inevitable. Companies like Perplexity have implemented clear labeling for sponsored content and made public commitments to maintain unbiased answers. Regulatory frameworks, user awareness, and competitive pressures could help maintain higher standards. However, the history of tech platforms suggests that without strong safeguards, the temptation to extract value often overcomes promises to serve users.

As AI continues to integrate into daily life, users and regulators must remain vigilant about maintaining transparency and accountability. The same technology that can honestly recommend a perfect restaurant in Rome could eventually steer users toward establishments that pay for placement. Recognizing this risk early—while AI is still in its relatively trustworthy phase—provides the best opportunity to establish protections before enshittification becomes entrenched.